Query Store is my favorite way to gather information about problem queries and plans, and I wanted to share some information on the useful metrics I use most.

The first two are obvious, but there’s a difference between them. The last two are not obvious but offer an unusual utility. I also wanted to explain why I use logical reads and mostly ignore physical reads.

CPU and Duration

Most tools and scripts are going to focus on queries’ CPU and duration, and they certainly should. I mention them to point out one important difference.

The CPU required for a given query depends on many factors: the operations in the plans, the physical reads generated, parallelism, etc. But this should be mostly consistent for the same query and plan with the same parameters as inputs.

Duration can vary more wildly. This could be driven by blocking, system load, resource contention, or other waits. The circumstances for these can vary wildly and will depend more on the current state of the server and the other queries running.

We should consider the duration of the query we are monitoring and measure the improvements we make while tuning it based on its duration. But duration isn’t a good measure of the amount of work a query is doing. There are many factors that can inflate a query’s duration by preventing the query from operating.

A high-duration query could just be a victim; the culprit could be the query blocking it or causing a resource issue. A high CPU query is actively doing more work than a query with less CPU.

Logical Reads

I’ve written about logical reads before and have long felt they are a sneaky good metric for performance.

If you have CPU-intensive queries or queries generating a lot of physical reads, they tend to be obvious. You can see the effects of either in Task Manager, much less any SQL Server specific tool. A quick check of your waits will make the nature of your problem obvious.

Logical reads don’t show up in the same way. If you are reading a large number of pages that are already in memory, it won’t tax your CPU or cause your disks to spike. There can be a large amount of work going on, but it’s not obvious where the work is taking place. And while logical reads are faster than getting data from spinning disks, that doesn’t mean it is instantaneous.

The second point about logical reads that makes them a good metric is that they are a consistent indicator of actual work. A given plan against the same source with the same parameters should read a similar number of pages. So you can compare two plans or queries just based on this metric; the query with more logical reads does more work.

Physical reads are more random; whether a query generates a lot of physical reads depends on what is in the cache at the moment. Running the same query again moments later may result in no physical reads. A truly huge query (or inefficient plan) could require more data than would fit into the cache, which would force more physical reads. That would generate physical reads more consistently than a smaller query.

If I’m going to look for queries that I may want to tune, I’ll look for my top queries by logical reads, not physical reads.

Execution Count

This is another sneaky good metric. It gives insights into the patterns in our applications and can lead to interesting conversations with developers.

Let’s say in Query Store, you see that a given plan is executed 1 million times a day. Is that a lot for the application? It could be hard to say. But if you consider that’s more than 11 executions per second all day, that sounds more significant.

Does that seem to match the pace of our application and the number of users in that database? It’s not obvious where to draw the line.

When I see oddities from the execution count, I may well ask the application developers, “Should this query be running 11 times a second all day?” Sometimes the dev can give you a firm no. That leads to a very different path to improve our database performance; we may do nothing while the application developers update behavior on their end. Or maybe we both make changes.

The execution count gives us insight into the behavior of our applications, which can be invaluable.

Rowcount

Not at all the same as the other metrics, but I’ve found a use for this metric recently that I wanted to share.

Imagine you are tuning a complex stored procedure. You start by finding the statement with the highest duration or CPU. The query joins several tables, and you can see which indexes are used by the plan.

You can review the statistics for the indexes to see how many rows you expect a given operation to return. That may be more accurate than the numbers in an estimated plan.

But what if there is a temp table in your query? There are no statistics to look at. You can’t be sure how many rows to expect from the temp table unless you are very familiar with the process. It could depend highly on the inputs to the procedure, and it could be key to how the query performs.

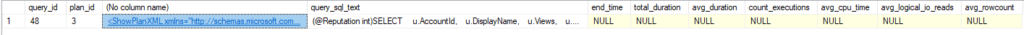

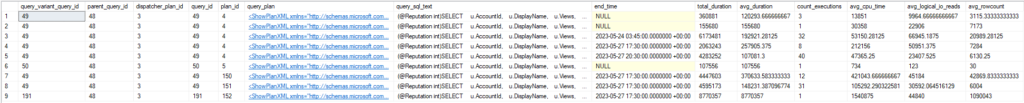

We can get that answer by finding the query that populates the temp table and checking its avg_rowcount in sys.query_store_runtime_stats. It might be wise to check min_rowcount and max_rowcount as well, to see how much variance there is.

This information gives useful context for the original query. It may help explain why one plan outperforms another, or suggest a different join order for the query.

An odd case

All of these metrics are useful, but sometimes we need more than one to see the whole picture.

A few years ago, I was reviewing an unfamiliar server with a high CPU. I used CPU as my first metric and found the top query. It wasn’t a bad plan (it was simple enough to be hard to improve), but the pattern was very odd. The query was executed several thousand times per hour during the day, but tens of thousands of times per hour at night.

That disparity in execution count was odd, and it was obvious in Database Performance Analyzer (and no, this blog isn’t sponsored, but the hourly graphs made it very obvious). Why would it behave this way? Is this database being used by customers in APAC?

I said at one point it was almost like this query was being called from a hard loop in the application; if the server was less active at night, there would be more resources to run the query.

That turns out to have been the exact cause. The loop in question should have had a time delay, but that delay was set to 0 milliseconds. The biggest clue in this case was the change to the execution count over time.

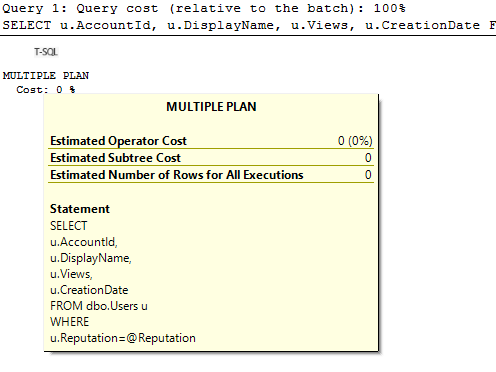

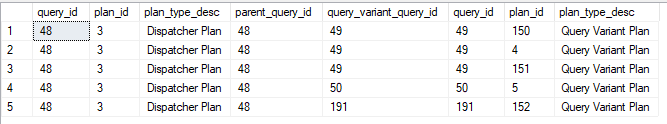

A quick sample

Here’s an example query including all of these fields. It’s aggregated and includes a few suggested filters.

SELECT

qsq.query_id,

qsp.plan_id,

SUM(rs.count_executions) as count_executions,

SUM(rs.avg_duration * rs.count_executions) as total_duration,

SUM(rs.avg_duration * rs.count_executions) / SUM(rs.count_executions) as avg_duration,

SUM(rs.avg_cpu_time * rs.count_executions) as total_cpu_time,

SUM(rs.avg_cpu_time * rs.count_executions) / SUM(rs.count_executions) as avg_cpu_time,

SUM(rs.avg_logical_io_reads * rs.count_executions) as total_logical_io_reads,

SUM(rs.avg_logical_io_reads * rs.count_executions) / SUM(rs.count_executions) as avg_logical_io_reads,

SUM(rs.avg_rowcount * rs.count_executions) as total_rowcount,

SUM(rs.avg_rowcount * rs.count_executions) / SUM(rs.count_executions) as avg_rowcount,

qt.query_sql_text,

CAST(qsp.query_plan as XML) AS query_plan

FROM sys.query_store_query qsq

INNER JOIN sys.query_store_plan qsp

ON qsp.query_id = qsq.query_id

INNER JOIN sys.query_store_query_text qt

ON qt.query_text_id = qsq.query_text_id

INNER JOIN sys.query_store_runtime_stats rs

ON rs.plan_id = qsp.plan_id

WHERE

rs.last_execution_time > DATEADD(DAY,-2, GETUTCDATE())

--AND qsq.object_id = OBJECT_ID('dbo.User_GetByReputation')

--AND qt.query_sql_text like '%%'

GROUP BY

qsq.query_id,

qsp.plan_id,

qsp.query_plan,

qt.query_sql_text

This is a good general Query Store query I’d use when reviewing a specific procedure or query. I can always modify from this if I need something specific.

Summary on Useful Metrics

I tend not to use the UI for Query Store often. I’d rather write queries to look at the details myself. I only recently saw the value of the rowcount field; that’s the main reason I wanted to write this blog.

There’s always more to learn.

You can follow me on Bluesky (@sqljared) and contact me if you have questions. My other social media links are at the top of the page. Also, let me know if you have any suggestions for a topic for a new blog post.